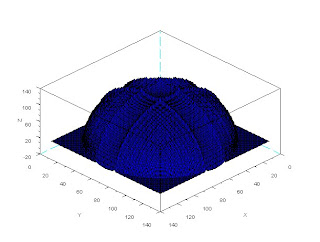

Original

Noised / Restored

The restoration removed the "trace" effect of the blur, but some additive artifacts remain. The method was not in vain, however: it was still effective enough to make the text readable again.
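The report does not say which restoration method produced the figure. As an illustration only, the sketch below assumes a horizontal motion blur plus additive Gaussian noise and restores the image with a Wiener filter, a standard deconvolution technique that is not necessarily the one used here. The helper names (`motion_blur_psf`, `blur`, `wiener_deconvolve`, `mse`), the blur length of 9 pixels, the noise level and the constant `k` are all hypothetical choices. The sketch reproduces the trade-off described above: the blur is largely undone, but the additive noise cannot be fully separated from the signal, so some artifacts remain.

```python
import numpy as np

# Hypothetical setup: horizontal motion blur + additive Gaussian noise,
# restored with a Wiener filter. Not necessarily the report's method.


def motion_blur_psf(length: int, shape: tuple[int, int]) -> np.ndarray:
    """Horizontal motion-blur point-spread function, zero-padded to `shape`."""
    psf = np.zeros(shape)
    psf[0, :length] = 1.0 / length  # averages `length` neighbouring pixels
    return psf


def blur(image: np.ndarray, psf: np.ndarray) -> np.ndarray:
    """Circular convolution via the FFT (assumes periodic image boundaries)."""
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(psf)))


def wiener_deconvolve(degraded: np.ndarray, psf: np.ndarray, k: float = 0.01) -> np.ndarray:
    """Wiener filter with a constant noise-to-signal ratio `k`.

    F_hat = conj(H) / (|H|^2 + k) * G. A larger `k` suppresses more noise
    but leaves more residual blur.
    """
    H = np.fft.fft2(psf)
    G = np.fft.fft2(degraded)
    F_hat = np.conj(H) / (np.abs(H) ** 2 + k) * G
    return np.real(np.fft.ifft2(F_hat))


def mse(a: np.ndarray, b: np.ndarray) -> float:
    """Mean squared error between two images."""
    return float(np.mean((a - b) ** 2))


rng = np.random.default_rng(0)
original = rng.random((64, 64))  # random stand-in for the text image
psf = motion_blur_psf(9, original.shape)
blurred = blur(original, psf)
noisy = blurred + rng.normal(0.0, 0.01, original.shape)  # additive noise
restored = wiener_deconvolve(noisy, psf, k=0.01)

print(f"MSE degraded: {mse(noisy, original):.4f}")
print(f"MSE restored: {mse(restored, original):.4f}")
```

Raising `k` smooths away more of the amplified noise at the cost of leaving more residual blur; lowering it toward zero approaches a plain inverse filter, which amplifies the noise wherever |H| is small. That is one reason some artifacts survive even a successful restoration.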

I give myself a 10/10 because I was able to implement the algorithms successfully.